Do you want to scrape tweets about a certain topic every morning? Or download all Instagram posts from an account every morning? Then this blog is for you. In it we discuss “automating OSINT”: automating tasks in an open-source intelligence (OSINT) investigation.

The case: the daily download of tweets about a certain topic

Let’s say you want to collect tweets about farmers’ protests every day. You could do that manually via the Twitter search bar, as shown in the screenshot, but that quickly becomes impractical, especially when many posts are published about the topic you are researching. Time for a different, automated solution.

An automated solution: a web scraper

Besides using an Application Programming Interface (API), which some websites and social media platforms offer for collecting data, you can also use so-called web scrapers. A web scraper selectively extracts certain data from a web page and stores it in a structured form for further processing and analysis. Many (paid) software packages and browser extensions offer web scraping, but we prefer open source tools: because their source code is public, we can assess whether using them poses any risk to our research.

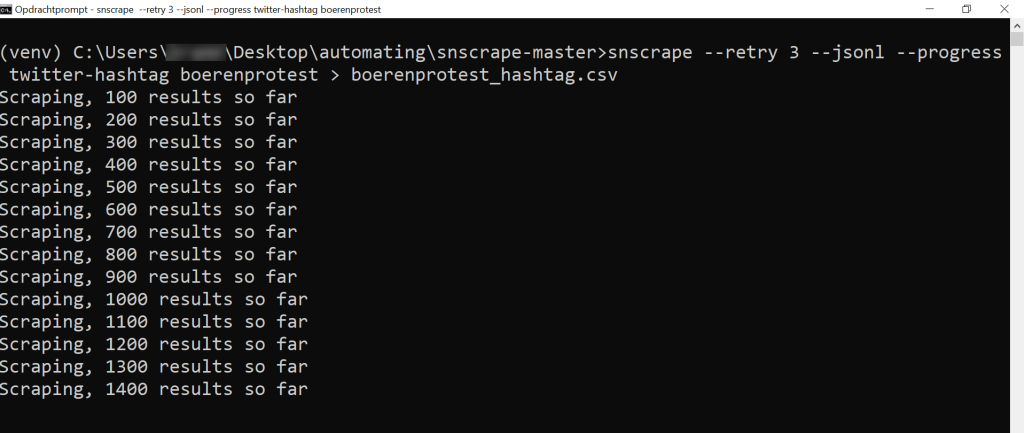

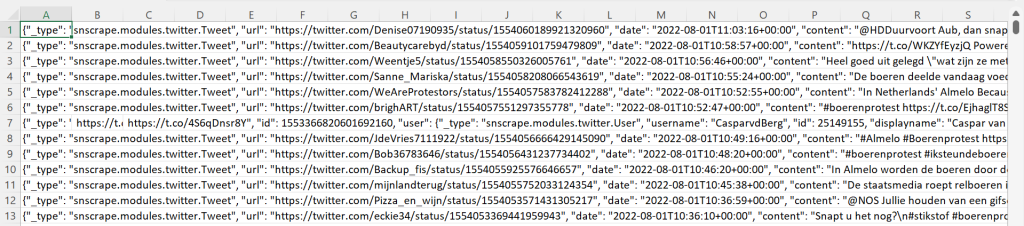

Two interesting tools for scraping tweets are Twint and snscrape. For example, once you have installed Python and snscrape, you can retrieve all tweets with the Dutch hashtag #boerenprotest (“farmers’ protest”) with the following command: `snscrape --retry 3 --jsonl --progress twitter-hashtag boerenprotest > boerenprotest_hashtag.jsonl`. The `--jsonl` option writes one JSON object per tweet (JSON Lines), so the output file gets a .jsonl extension rather than .csv.
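Because the `>` redirect overwrites the output file, running the command every day would replace yesterday’s results. The Python sketch below is one way around that: it builds the same snscrape command and writes each day’s output to a file with the date in its name. The function names and the dated file-name pattern are hypothetical choices of ours, and the sketch assumes the `snscrape` executable is on your PATH.

```python
from __future__ import annotations

import datetime
import subprocess
from pathlib import Path


def build_snscrape_command(hashtag: str) -> list[str]:
    """Return the snscrape command from the text as an argument list."""
    # Same options as the command-line example: retry failed requests up to
    # 3 times, output JSON Lines and show a progress indicator.
    return ["snscrape", "--retry", "3", "--jsonl", "--progress",
            "twitter-hashtag", hashtag]


def dated_output_path(hashtag: str, day: datetime.date, folder: Path) -> Path:
    """Hypothetical naming scheme: one .jsonl file per hashtag per day."""
    return folder / f"{hashtag}_hashtag_{day.isoformat()}.jsonl"


def scrape_hashtag(hashtag: str, folder: Path = Path(".")) -> Path:
    """Run snscrape and save its standard output to today's file."""
    output = dated_output_path(hashtag, datetime.date.today(), folder)
    with output.open("w", encoding="utf-8") as fh:
        # check=True raises CalledProcessError if snscrape exits with an error.
        subprocess.run(build_snscrape_command(hashtag), stdout=fh, check=True)
    return output
```

Calling `scrape_hashtag("boerenprotest")` from a scheduled script would then leave a separate file for each day instead of a single, repeatedly overwritten one.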

The result is shown below. Of course, the results still need to be cleaned up before you can process and analyze them further, but that is not what this blog is about. This blog is about automating the process above; in other words, running the command above automatically.

Run the web scraper daily (Windows)

To run the above command daily, you can use Task Scheduler on Windows and Automator on macOS. On Windows, you first have to create a .cmd file: a plain-text batch script containing commands that Windows runs one after another in the Command Prompt.

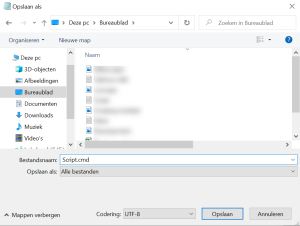

Create a .cmd file

To create a .cmd file, open Notepad, choose “Save As…” and give the file a name ending in “.cmd”. Make sure to set “Save as type” to “All files”; otherwise Notepad may save it as a text file with .txt appended to the name.

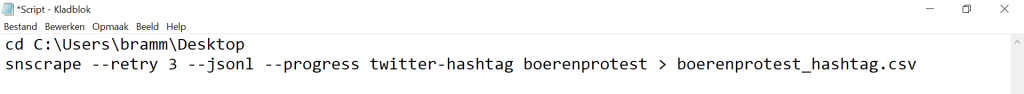

Fill the .cmd file with a script

Because the .cmd file is still empty, you have to fill it with a script. If we want to run the earlier snscrape command for the #boerenprotest hashtag, the script could look like this:

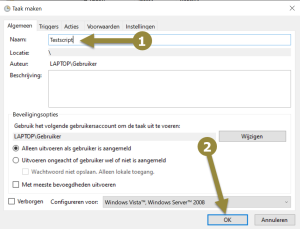
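As an alternative to Notepad, or to generate scripts for several hashtags at once, you could let Python write the .cmd file. The sketch below assumes a hypothetical working folder `C:\OSINT\scraper` and reuses the snscrape command from earlier; the script in the screenshot may differ. The `cd /d` line makes the output land in a known folder, because a scheduled task does not necessarily start in the folder that contains the script.

```python
from pathlib import Path

# Hypothetical working folder; change it to where you want the results saved.
WORK_DIR = r"C:\OSINT\scraper"


def cmd_script(hashtag: str, work_dir: str = WORK_DIR) -> str:
    """Return the text of a .cmd batch file that runs snscrape for one hashtag."""
    lines = [
        "@echo off",
        # /d also switches drive letters if the folder is on another drive.
        f'cd /d "{work_dir}"',
        f"snscrape --retry 3 --jsonl --progress twitter-hashtag {hashtag}"
        f" > {hashtag}_hashtag.jsonl",
    ]
    # Windows batch files traditionally use CRLF line endings.
    return "\r\n".join(lines) + "\r\n"


def write_cmd_file(path: Path, hashtag: str, work_dir: str = WORK_DIR) -> Path:
    """Write the batch script; refuse names that do not end in .cmd."""
    if path.suffix.lower() != ".cmd":
        raise ValueError(f"expected a .cmd file name, got {path.name}")
    # newline="" stops Python from translating the \r\n we already added.
    with path.open("w", encoding="utf-8", newline="") as fh:
        fh.write(cmd_script(hashtag, work_dir))
    return path
```

For example, `write_cmd_file(Path(r"C:\OSINT\scraper\scrape.cmd"), "boerenprotest")` would produce a file you can then schedule as described below.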

Schedule the .cmd file in Task Scheduler (1/3)

To create a task, open the Task Scheduler application via the Start menu. Once it has started, create a new task and enter a name for it under the “General” tab (arrow 1). We have named our task “Test script”. Then click “OK” (arrow 2) and proceed to step 2.

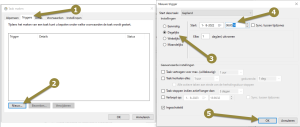

Schedule the .cmd file in Task Scheduler (2/3)

On the “Triggers” tab (arrow 1), click “New” (arrow 2) to create a new trigger for your task. Select the “Daily” option (arrow 3) and set the time at which your script should run each day (arrow 4). Then click “OK” (arrow 5) to continue.

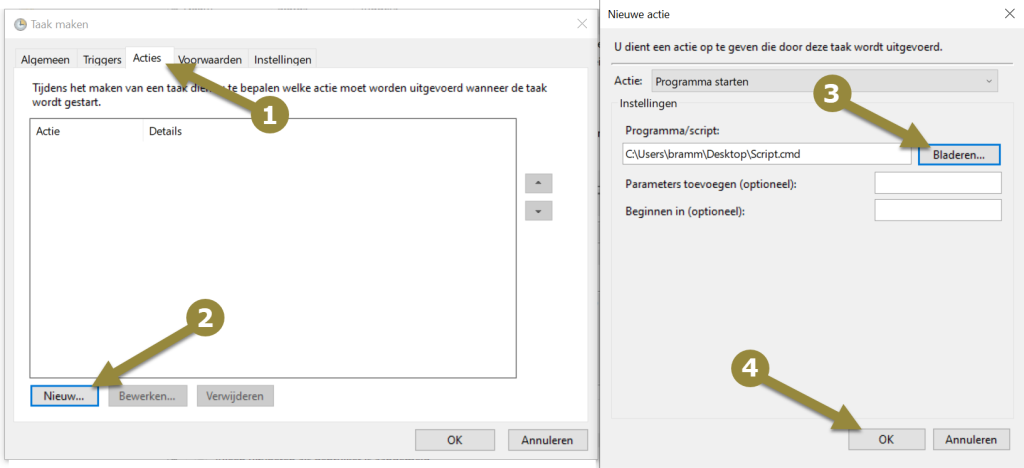

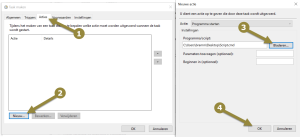

Schedule the .cmd file in Task Scheduler (3/3)

On the “Actions” tab (arrow 1), click “New” (arrow 2) to create a new action for your task. Use “Browse” (arrow 3) to select the .cmd file you created, and then click “OK” (arrow 4). Everything is now set up, and your task will run daily at the time you chose.
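The same daily task can also be created from the command line with Windows’ built-in `schtasks` tool, which is handy when you need to set up several tasks. The Python sketch below only builds and runs that command; the task name “Test script” matches the example above, while the script path and the 07:00 start time are placeholders we made up.

```python
from __future__ import annotations

import datetime
import subprocess


def schtasks_daily_command(task_name: str, script_path: str,
                           start: datetime.time) -> list[str]:
    """Build a schtasks call that runs script_path every day at `start`."""
    return [
        "schtasks", "/Create",
        "/SC", "DAILY",                  # schedule type: once a day
        "/TN", task_name,                # name shown in Task Scheduler
        "/TR", script_path,              # program or script to run
        "/ST", start.strftime("%H:%M"),  # start time, 24-hour HH:mm
    ]


def create_daily_task(task_name: str, script_path: str,
                      start: datetime.time) -> None:
    """Register the task; this only works on Windows, where schtasks exists."""
    subprocess.run(schtasks_daily_command(task_name, script_path, start),
                   check=True)
```

For example, `create_daily_task("Test script", r"C:\OSINT\scraper\scrape.cmd", datetime.time(7, 0))`. Keep the script path free of spaces, or check the `schtasks` documentation for how to quote it.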

And what next?

With the above task you have ensured that a self-made script is executed every morning at a certain time. Now that you know how to run a script automatically, you can start creating more advanced scripts and tasks. Think of scripts that automatically download data, update files, and so on.

Can this also be done on a Mac?

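Yes, of course. On macOS you first need to make your script executable (with `chmod +x`). You can then create a Calendar Alarm workflow in Automator and add actions that get your script as a Finder item and open it (for example “Get Specified Finder Items” followed by “Open Finder Items”). The workflow is added to Calendar as an event, so you can schedule the script to run periodically by making that event repeat. For more information, please contact us.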
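As an alternative to the Automator and Calendar route (a different mechanism from the one described above), macOS’s built-in launchd service can also run a script on a schedule. The Python sketch below shows the `chmod +x` step and builds a launchd job definition; the label `com.example.scraper`, the script path and the 07:00 time are made-up placeholders. It assumes the script starts with a shebang line such as `#!/bin/sh`, because launchd executes it directly.

```python
import plistlib
import stat
from pathlib import Path


def make_executable(path: Path) -> None:
    """Roughly what `chmod +x` does: add execute permission for everyone."""
    mode = path.stat().st_mode
    path.chmod(mode | stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH)


def launchd_plist(label: str, script: Path, hour: int, minute: int) -> bytes:
    """Return an XML launchd job that runs `script` every day at hour:minute."""
    job = {
        "Label": label,                     # unique name of the job
        "ProgramArguments": [str(script)],  # the script to execute
        # Without Weekday/Day keys, launchd runs the job daily at this time.
        "StartCalendarInterval": {"Hour": hour, "Minute": minute},
    }
    return plistlib.dumps(job)  # XML format by default
```

You would save the output as `~/Library/LaunchAgents/com.example.scraper.plist` and load it with `launchctl`; see `man launchctl` for the exact subcommand on your macOS version.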

Note: think about the legal framework!

Keep in mind that by automating searches you may retrieve larger amounts of data. Whether that is legally permissible depends entirely on the context of your research. So always consult, for example, a lawyer or Public Prosecutor before you get started!

More information?

Do you want to know more about automating searches? Then our OSINT training III (Expert) might be something for you. In this training we will cover the use of RSS Feeds, conditional logic, machine translations, APIs, web scrapers, open source tooling and much more!